Dolby Vision, HDR10, HDR10+, HLG, Advanced HDR … you are not the only one confused by today's alphabet soup of HDR technologies. This guide explains what each format is about, along with its advantages and disadvantages when watching movies or playing games.

Dolby Vision, HDR10, HDR10+, HLG and Advanced HDR

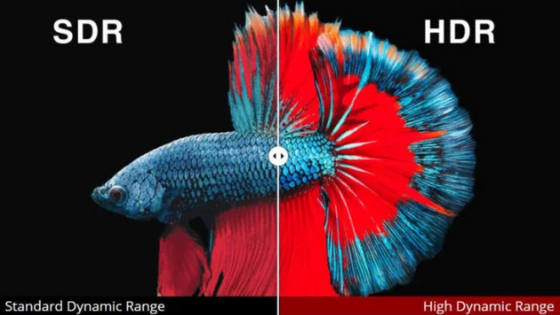

High Dynamic Range (HDR) technology enables content to be reproduced with greater color fidelity and contrast. Dynamic range is the difference in brightness between the darkest and the brightest point a screen can reproduce, with brightness measured in nits. The wider that range, the higher the contrast.

Compared to a standard TV, known as SDR (Standard Dynamic Range), an HDR-capable screen reaches a much higher brightness in nits (i.e., a greater distance between its brightest and darkest points). This lets it render highlights more accurately, delivering better contrast, more detail in dark scenes, and greater color fidelity.
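To put numbers on that "distance", here is a minimal Python sketch that turns a screen's peak brightness and black level into a contrast ratio and a dynamic range in stops (each stop is a doubling of brightness). The function names and the SDR and HDR figures are illustrative assumptions, not measurements of any real TV.

```python
import math


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Ratio between the brightest and darkest luminance a screen can show."""
    if black_nits <= 0:
        raise ValueError("black level must be greater than 0 nits")
    return peak_nits / black_nits


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Dynamic range in stops: how many times brightness doubles from black to peak."""
    return math.log2(contrast_ratio(peak_nits, black_nits))


# Hypothetical screens (assumed values, for illustration only).
screens = {
    "SDR example": (100.0, 0.1),    # peak nits, black level nits
    "HDR example": (1000.0, 0.05),
}

for name, (peak, black) in screens.items():
    ratio = contrast_ratio(peak, black)
    stops = dynamic_range_stops(peak, black)
    print(f"{name}: {ratio:,.0f}:1 contrast, {stops:.1f} stops")
```

With these assumed figures, the HDR example comes out at roughly four stops more dynamic range than the SDR one.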

There are five main HDR standards competing in the market. They are broadly similar, each with its own advantages and disadvantages. A given piece of content (movie, series, or game) may support just one standard or several. If you remember the Blu-ray vs. HD DVD fight or the older VHS vs. Betamax fight, you're on the right track.

1. Dolby Vision

Dolby Vision is Dolby Laboratories' proprietary HDR standard and was one of the first to reach TVs, along with HDR10. It supports a theoretical peak brightness of 10,000 nits and a 12-bit color depth, making it the highest-quality HDR format on the market today. However, all this power comes at a price: Dolby charges royalties for using the technology in TVs, media players, phones, tablets, consoles, etc., as well as in movies, series, videos and games.

2. HDR10

Horizon: Zero Dawn running on PS4 Pro: HDR10 (left) and SDR (right)

HDR10 was announced in 2015 by the Consumer Technology Association. The format is the standard on 4K Blu-ray Discs and has been adopted by the PS4 Pro, Xbox One S and Xbox One X. Content is typically mastered for a peak brightness of 1,000 nits, with a 10-bit color depth. HDR10 is also not "dynamic": it uses static metadata, a single set of brightness information for the whole title, which gives less than optimal results. In darker scenes colors can look oversaturated, whereas Dolby Vision's metadata is dynamic and brightness adjusts to each scene. On the other hand, HDR10 is an open, royalty-free standard that any manufacturer can use.
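To get a feel for what the jump from 10 to 12 bits means, the short Python sketch below counts how many brightness levels each color channel gets and how many colors that adds up to across red, green and blue. The 8-bit row for SDR is an assumption added for comparison, and the helper names are made up for this example.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take at a given bit depth."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Number of red/green/blue combinations at a given bit depth per channel."""
    return levels_per_channel(bits) ** 3


formats = [
    ("SDR (8-bit, assumed for comparison)", 8),
    ("HDR10 (10-bit)", 10),
    ("Dolby Vision (12-bit)", 12),
]

for name, bits in formats:
    print(f"{name}: {levels_per_channel(bits):,} levels per channel, "
          f"{total_colors(bits):,} colors")
```

Each extra pair of bits multiplies the levels per channel by four, which is why 12-bit video can show smoother gradients with less visible banding than 10-bit video.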

3. HDR10+

HDR10+, developed by Samsung and Amazon Video, is the HDR10 camp's answer to Dolby Vision. It was created to address HDR10's lack of dynamic tone mapping by adjusting brightness and contrast from one scene to the next. However, the format keeps the same brightness and color depth values as the previous generation, 1,000 nits and 10 bits. HDR10+ also keeps the advantage of being an open format that manufacturers can use without paying royalties.
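The difference between static and dynamic metadata is easier to see with a toy example. The Python sketch below assumes a hypothetical 500-nit display and a simple linear scaling rule; real TVs use far more sophisticated tone-mapping curves, so treat this as an illustration of the idea and not as how HDR10, HDR10+ or Dolby Vision actually process a picture. All names and values are invented for the example.

```python
DISPLAY_PEAK_NITS = 500.0  # hypothetical display, assumed for this example


def tone_map(pixel_nits: float, source_peak_nits: float,
             display_peak_nits: float = DISPLAY_PEAK_NITS) -> float:
    """Toy tone mapping: scale linearly only when the source is brighter than the display."""
    if source_peak_nits <= display_peak_nits:
        return pixel_nits  # the display can show this range as-is
    return pixel_nits * display_peak_nits / source_peak_nits


def map_static(scenes: dict) -> dict:
    """Static metadata: one peak value for the whole title is applied to every scene."""
    title_peak = max(max(pixels) for pixels in scenes.values())
    return {name: [tone_map(p, title_peak) for p in pixels]
            for name, pixels in scenes.items()}


def map_dynamic(scenes: dict) -> dict:
    """Dynamic metadata: each scene carries its own peak value."""
    return {name: [tone_map(p, max(pixels)) for p in pixels]
            for name, pixels in scenes.items()}


# Made-up pixel brightness samples (in nits) for two scenes of one movie.
movie = {
    "dark cave": [0.5, 5.0, 40.0],
    "sunny beach": [50.0, 400.0, 1000.0],
}

print("static: ", map_static(movie))
print("dynamic:", map_dynamic(movie))
```

In this toy model the static approach dims the dark cave to half its intended brightness, only because the beach scene elsewhere in the movie is very bright. With per-scene metadata the cave is left untouched and only the beach is scaled down.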

4. HLG

HLG, short for Hybrid Log-Gamma, was created by the broadcasters BBC and NHK. Focused on live broadcasting (unlike on-demand streaming and home video), it carries no metadata for brightness calculation, leaving the screen to decide how to display the signal, and it even works on SDR screens. An older TV will show a normal image, while a newer TV will show live HDR. Although its image quality is better than SDR, HLG ranks slightly below HDR10, HDR10+ and Dolby Vision.
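The "hybrid" in the name refers to the shape of the curve that turns scene brightness into a video signal: the darker part follows a gamma-style square-root curve, which is what keeps SDR sets happy, and the brighter part follows a logarithmic curve that makes room for HDR highlights. The sketch below implements that curve in Python using the constants as I recall them from ITU-R BT.2100; treat the exact figures as an assumption to check against the standard, and the function name as made up for this example.

```python
import math

# HLG constants as recalled from ITU-R BT.2100 (verify against the spec).
A = 0.17883277
B = 1.0 - 4.0 * A                   # about 0.28466892
C = 0.5 - A * math.log(4.0 * A)     # about 0.55991073


def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene light (0.0 to 1.0) to an HLG signal value (0.0 to 1.0)."""
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be between 0.0 and 1.0")
    if scene_light <= 1.0 / 12.0:
        # Gamma-like segment: behaves like a conventional SDR camera curve.
        return math.sqrt(3.0 * scene_light)
    # Logarithmic segment: compresses bright highlights into the remaining signal range.
    return A * math.log(12.0 * scene_light - B) + C


for light in (0.0, 1.0 / 12.0, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

Notice that the lower half of the signal range (up to 0.5) covers only the darkest twelfth of the scene light, while the upper half is reserved for everything brighter.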

5. Advanced HDR

Technicolor's Advanced HDR comprises three distinct HDR sub-standards.

Each has a purpose:

- SL-HDR1: Uses HLG as a base for TV broadcasting and is compatible with older SDR displays;
- SL-HDR2: Similar to HDR10+ and Dolby Vision, with metadata for dynamic tone mapping;
- SL-HDR3: Enhanced version of SL-HDR1; introduces metadata for dynamic tone adjustment in live broadcasts; still in the testing phase.