Instagram will begin alerting users when they are about to post a potentially offensive caption on photos or videos in the feed, giving them the chance to edit the message before posting it. The feature, which is intended to combat online bullying, was announced on Monday (16) by the social network.

The tool relies on artificial intelligence that can recognize different forms of bullying on Instagram, the same technology used since July to flag potentially offensive comments posted on the platform.

According to the company, the results obtained by using this feature in the comments were promising, showing that this kind of notification can “encourage people to reconsider their words when given a chance”.

The new feature helps fight online bullying. (Source: Instagram/Press Release)

In October, the social network also launched the Restrict feature, which helps protect users from unwanted interactions by limiting the visibility of comments and direct messages from people who repeatedly send derogatory remarks.

How it works

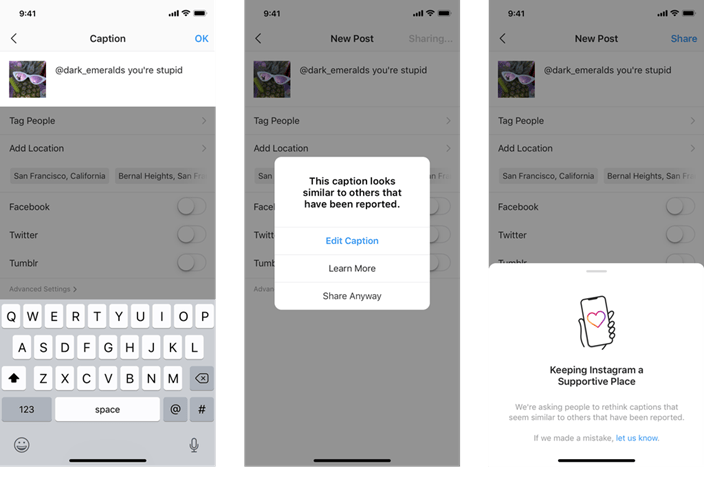

Instagram’s new moderation feature will kick in whenever a user is writing a caption. If the artificial intelligence identifies something offensive in the text, it will alert the user that the caption is similar to others reported as bullying.

After seeing the alert, the user will be encouraged to rewrite the caption using different terms, but can also post it as is, without changes.

According to Instagram, the new feature also helps educate people about what is not allowed on the social network and shows when an account may be at risk of violating the platform's rules. It will reach selected countries first before expanding worldwide in the coming months.